Facebook is, without a doubt, a major attention-grabber.

It remains one of the most popular social media networks on the planet, with billions of active users. These users share content of all kinds, from videos and memes to photos and news stories. In fact, every minute there are approximately 3 million pieces of content shared.

Facebook is heavily skewed towards news stories and updates. While other platforms, such as Instagram and Snapchat, are filled with images and videos (and you’ll find those on Facebook, too), Facebook is the place where you are most likely to find news content being shared. There are several possible reasons for this:

- Facebook captures a large and varied demographic.

- The platform lends itself to easy sharing and lengthy discussion (no response length limits like Twitter).

- Users tend to spend significant time on Facebook, with most US adult users (74%) reporting that they visit the site at least once per day.

Whatever the reasons, Facebook leads the way with news content. In fact, 68% of Americans claim they get their news from Facebook. This is higher than the 41% surveyed in Australia, which has decreased from 45% since 2016. Yet even 41% represents a significant portion of the population who receive their news updates via Facebook.

Unfortunately, here’s where the problem arises.

Just how accurate is this news?

The power of social media is evident. We can see it most easily when we’re faced with something that has ‘gone viral.’ Users simply have to share an item that piques their interest, and it is immediately spread out to their followers, who can then share the item, and so on. This explains why a cute video of a panda can get millions of views in just one day.

There’s no harm in the endless sharing of an adorable panda video. However, when it comes to the sharing of news stories or “fact-based” posts on social media, things can start to get sticky.

What happens when a fake story spreads like wildfire? What takes place when an infographic filled with misinformation makes the rounds? What transpires when a false statement (especially one that is emotionally charged) keeps circulating?

The cultural, societal, and ethical implications are endless.

Not to mention, when fake news is everywhere, it becomes increasingly difficult to separate fact from fiction. How can you know what is true? What networks can you trust?

The Fight Against Fake News: Facebook’s Path

Facebook is far from the only social media platform that is subject to the appearance of fake news. Yet its popularity among the masses (and perhaps its infamy as a platform where political debates are likely to crop up) makes it stand out as one of the worst offenders.

Fortunately, Facebook executives are not unaware of the problem. And they have actively been taking steps to stop fake news in its tracks, attempting to combat the spread of misinformation on their platform.

Let’s take a look at what they’ve been doing.

The fight against fake news seemingly began in 2016. Concerned about the inauthentic articles, doctored images, and other misleading content circulating on Facebook, the company announced that it would ban fake news sites from utilising its ad network. Cutting off these sources from ad revenue was a powerful first step, and it demonstrated clearly that Facebook would not support this type of misinformation.

While it was a noble start, this article from BBC News reminds us that both 2016 and 2017 were years filled with fake news. Widely shared articles stoked terrorism fears, spread false meteorological information, and greatly exaggerated death tolls for incidents like London’s Grenfell Tower fire. (One video that made false death toll claims was viewed more than 6 million times.) Clearly, misinformation spreads quickly and easily.

Facebook, in particular, received harsh criticism for allowing the spread of fake news in 2016. Following the US election, Facebook came under fire for allowing the viral circulation of many false, often inflammatory political articles. This rampant fake news, it has been argued, quite possibly influenced the outcome of the election. While Mark Zuckerberg found the claims to be ‘crazy,’ the evidence that some articles were shared millions of times makes it hard not to conjecture that this could have played a role, large or small.

With mounting pressure, Facebook declared that it would start flagging fake news stories. This would be done with the help of its users, who could notify the platform if an article or post appeared to be fake. Once enough users had flagged the post, Facebook would turn to external fact-checking organisations to verify its authenticity. Items confirmed to be fake news wouldn’t automatically be removed from the site, but they would likely appear lower in the newsfeed. Such items would also be emblazoned with a note that read: “disputed by 3rd party fact-checkers.” Users could click for more information on why the item was disputed, and while they could still opt to share the article, another warning would appear, reminding them that the item had questionable reliability.
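The flow described above can be sketched in a few lines of Python. This is only an illustration of the steps as reported, not Facebook's actual implementation: the `Post` class, the function names, and especially the `FLAG_THRESHOLD` value are assumptions made for the example, since the number of user flags required was not stated.

```python
from dataclasses import dataclass

# Hypothetical value: the real number of flags needed was not published.
FLAG_THRESHOLD = 3


@dataclass
class Post:
    url: str
    user_flags: int = 0            # how many users reported the post as fake
    fact_checked: bool = False     # has an external fact-checker reviewed it?
    confirmed_false: bool = False  # did the fact-checker dispute it?


def needs_review(post: Post) -> bool:
    """A post goes to external fact-checkers once enough users flag it."""
    return not post.fact_checked and post.user_flags >= FLAG_THRESHOLD


def display_state(post: Post) -> dict:
    """Disputed posts stay on the site but are labelled, demoted, and warned about."""
    disputed = post.fact_checked and post.confirmed_false
    return {
        "label": "Disputed by 3rd party fact-checkers" if disputed else None,
        "demoted": disputed,
        "warn_on_share": disputed,
    }
```

Note that nothing in the sketch deletes a post: as in the approach described, a disputed item is only labelled, ranked lower, and accompanied by a warning when shared.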

Did this type of flagging work? In some cases, it may have, but in other instances, the system worked imperfectly or even clearly backfired. Some articles that had been disputed were not marked as such. Those that were marked occasionally aroused anger in various groups. One conservative American article that had been declared fake news caught the eye of conservative groups, who opted to continue sharing the piece like wildfire, spreading it even faster and further.

2018: Enter the robots. In June, Facebook shared that it was increasingly utilising automated, machine-learning technologies in addition to human fact-checkers.

In September of 2018, Facebook announced that this complex technology would now be accessible to its 27 fact-checking partners across the globe. Facebook said that machine learning was the driving force behind this tool. Based on various “engagement signals,” the technology could better identify and remove posts that are likely to include falsified information. One of these engagement signals is the feedback that users themselves can provide when flagging fake news. Automation can then discern if a flagged post has duplicates, and the software can locate other links or domains promoting the same misinformation. Since items get shared over and over, this seems to be a logical approach to tackling the spread of fake news. The assistance of machine learning also reduces the burden on third-party fact-checkers, who can then provide the final word on authenticity once items have been vetted by the ‘bots.
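To make the idea of finding duplicates of a flagged post more concrete, here is a minimal sketch using a common text-similarity technique: word shingles compared with Jaccard similarity. Facebook has not described its method at this level of detail, so the technique, the function names, and the `threshold` value are all assumptions chosen for illustration.

```python
import re


def shingles(text: str, k: int = 3) -> set:
    """Split text into overlapping runs of k lowercase words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a: set, b: set) -> float:
    """Share of shingles the two sets have in common (0.0 to 1.0)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def find_duplicates(flagged: str, candidates: list, threshold: float = 0.6) -> list:
    """Return the candidate posts whose wording closely matches the flagged post."""
    target = shingles(flagged)
    return [c for c in candidates if jaccard(target, shingles(c)) >= threshold]
```

Because punctuation and capitalisation are ignored, a re-post that only changes those details still matches the flagged original, while an unrelated story scores close to zero.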

Taking things a step further, this “machine-learning technology is also being deployed to identify and demote the visibility of pages that are likely to spread financially-motivated hoaxes, typically set up by businesses, political parties or other organisations.” Overall, the software makes solid promises.

Another element has been put in place to rank the trustworthiness of Facebook users who flag content as fake news. Using various data points, a user has a trust score assigned to them which lets the automation know if they should be considered a reliable source for flagging misinformation. This could be useful in ensuring reports are authentic, weeding out those users who flag articles that they merely disagree with.
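The data points behind the trust score have not been made public, so the following is only a sketch of how such a score could work in principle. It assumes a single signal: how often a user's past flags were later confirmed by fact-checkers. The function names and the smoothing formula are hypothetical.

```python
def trust_score(confirmed_flags: int, total_flags: int) -> float:
    """Smoothed share of a user's past flags that fact-checkers confirmed.

    Adding 1 to the numerator and 2 to the denominator means a user with
    no history starts at a neutral 0.5 rather than at 0 or 1.
    """
    return (confirmed_flags + 1) / (total_flags + 2)


def weighted_flag_count(flagger_histories: list) -> float:
    """Sum the trust scores of everyone who flagged a post.

    Each entry is a (confirmed_flags, total_flags) pair for one flagging user.
    """
    return sum(trust_score(confirmed, total) for confirmed, total in flagger_histories)
```

Under this assumption, a user whose flags are usually upheld counts for more than one who flags articles they merely disagree with: a history of 8 confirmed flags out of 10 gives a score of 0.75, while 0 out of 10 gives roughly 0.08.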

Battling Fake News in 2019

What about today? How does Facebook prevent the spread of fake news on its platform in 2019?

In many ways, we’ve definitely come a long way from 2016.

One ongoing study on these trends suggests that “the overall magnitude of the misinformation problem may have declined, at least temporarily, and that efforts by Facebook following the 2016 election to limit the diffusion of misinformation may have had a meaningful impact.” Yet this study also includes references to reports that fake news is still a problem on Facebook, and points to various examples of major fake news sites on Facebook that have experienced little to no decline in user engagement.

This article from Mashable suggests we should be optimistic about Facebook’s efforts, but it also outlines some of the issues facing the fight against fake news. Fact-checking can be a tough challenge, but it is especially so in countries where misinformation is widely pervasive, such as the Philippines.

Other Efforts to Fight Fake News

Facebook isn’t the only company endeavouring to combat fake news. Other platforms and service providers are taking similar steps to protect the authenticity of the content shared.

Twitter is one platform that has not received high marks for its efforts. Many articles lambast Twitter for failing to fight fake news, but some sources also report that fake news is not as prevalent on Twitter as it is on Facebook.

Microsoft has recently announced (in partnership with NewsGuard) that its Edge mobile browser will include warnings for potentially disputed or fake news. Last year it began offering the feature on the desktop version of Edge for Windows 10. The warnings are still an optional feature for users. While Edge isn’t widely used, this is an important step forward in the fight against fake news and could be a model for other companies to adopt.

How to Spot Fake News

Despite the best efforts of Facebook (and other platforms), it’s likely that you are going to come across fake news in your time on the Internet. And chances are very, very high that you’ve already encountered fake news several times on the web. So how can you learn to spot it?

Here are some things to keep in mind:

Pay attention to URLs

Sometimes fake news sites have complicated or lengthy URLs. While this doesn’t necessarily label them fake news, most well-known (and well-respected) organisations use concise URLs and brand names. If a URL feels suspect, it probably is.
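If you are curious how a check like this might look when automated, here is a rough sketch. The list of known domains, the length limit, and the function name are all illustrative assumptions, and a warning from this kind of check is only a prompt to look more closely, never proof that a site is fake.

```python
from urllib.parse import urlparse

# Illustrative list only: extend it with outlets you already know and trust.
KNOWN_DOMAINS = ("bbc.co.uk",)


def url_warnings(url: str, max_length: int = 75) -> list:
    """Return human-readable reasons a URL deserves a closer look."""
    host = (urlparse(url).hostname or "").lower()
    warnings = []
    if len(url) > max_length:
        warnings.append("unusually long URL")
    for known in KNOWN_DOMAINS:
        brand = known.split(".")[0]
        # The real site or one of its subdomains is fine; a lookalike is not.
        is_genuine = host == known or host.endswith("." + known)
        if brand in host and not is_genuine:
            warnings.append(f"resembles {known} but is a different domain")
    return warnings
```

For example, `url_warnings("https://www.bbc.co.uk/news")` returns an empty list, while a made-up lookalike such as `http://bbc-news.example.com/story` is reported as resembling `bbc.co.uk` but belonging to a different domain.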

Is this the only source reporting this story?

Perhaps you come across a shocking and outlandish article. Could its information be true? One quick way to gauge the authenticity of a story is to see if other trusted news outlets are reporting it. A quick Google search can usually do the trick.

You can always do your own fact-checking

Sources like Snopes.com are designed to help users easily debunk fake news or hoaxes. If in doubt, dig a bit deeper.

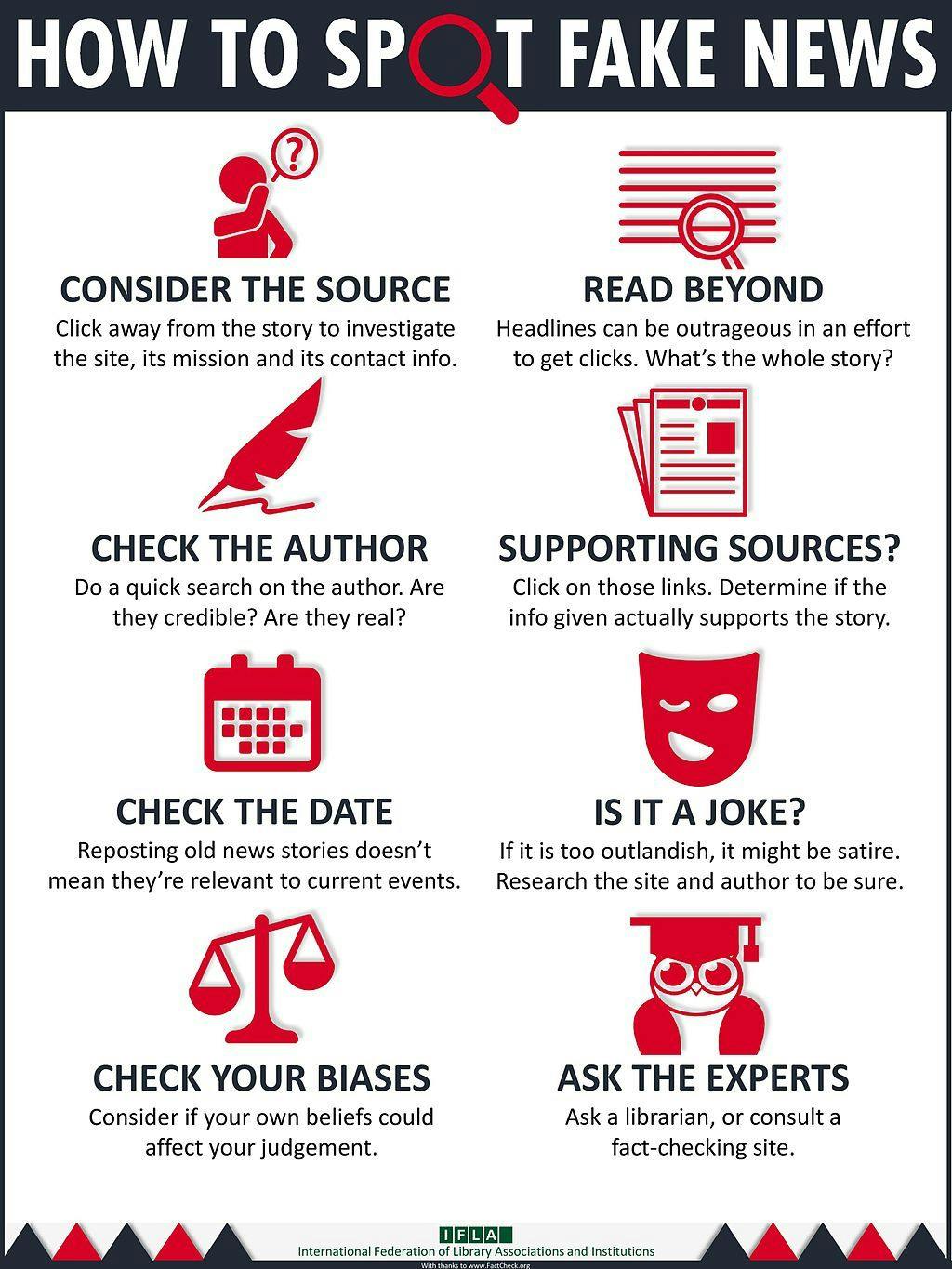

This helpful infographic from IFLA can also offer guidance.

This infographic drives home the importance of verifying the trustworthiness of authors, the sources they are linking to, and the source itself. Checking the date of a post can also be illuminating. And of course, it’s necessary to remember that there are satirical news sites out there. Some pieces are not designed to be believed as fact, but occasionally can be misleading.

Perhaps most importantly, this infographic reminds us to be prudent and careful consumers of news and information. It’s important to read beyond headlines (which can frequently be shocking click-bait type headlines) and consider the whole story. At the same time, it’s a good idea to look at other news stories on a given site. How do these hold up? Does the site seem to share information that no other news source provides? This could be problematic.

The infographic also reminds us to check our personal biases, which can be challenging, but is incredibly important. Regardless of your opinions and beliefs, genuine news will be factual in nature, and it’s vital to separate our biases from fact.

It seems that fake news is likely to stick around for a while. But in many ways, this is not surprising, since as humans, we’ve always been drawn to gossip, rumour, and controversy. It’s possible that as machine learning increases in its power, the pervasiveness of fake news will eventually start to fade.